Multilayer Perceptron

A multiclass feed-forward neural network classifier with user-defined hidden layers. The Multilayer Perceptron is a deep learning model capable of forming higher-order feature representations through layers of computation. In addition, the MLP features progress monitoring, which stops training early when it can no longer improve the validation score. It also uses network snapshotting to ensure that it keeps the best model parameters, even if performance began to decline later in training.
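The progress monitoring and snapshotting described above can be sketched as a loop over per-epoch validation scores and training losses. This is a minimal plain-PHP sketch of generic early stopping, not Rubix ML's own training loop. `earlyStop()` is a hypothetical helper, `$window` and `$minChange` stand in for the `window` and `minChange` parameters listed under Parameters, and "taking a snapshot" is reduced to remembering the best epoch.

```php
<?php

/**
 * Hypothetical sketch of early stopping with snapshotting.
 *
 * $scores[$i] and $losses[$i] are the validation score and training loss
 * observed after epoch $i + 1. Returns the epoch whose parameters would be
 * restored (best score) and the epoch at which training would stop.
 */
function earlyStop(array $scores, array $losses, int $window, float $minChange): array
{
    $bestScore = -INF;
    $bestEpoch = 0;
    $stale = 0;        // consecutive epochs without a new best score
    $prevLoss = INF;

    foreach ($scores as $i => $score) {
        $epoch = $i + 1;

        if ($score > $bestScore) {
            // New best score: this is where a snapshot would be taken.
            $bestScore = $score;
            $bestEpoch = $epoch;
            $stale = 0;
        } else {
            $stale++;
        }

        // Stop if the score has not improved for $window epochs ...
        if ($stale >= $window) {
            return ['best' => $bestEpoch, 'stopped' => $epoch];
        }

        // ... or if the training loss has stopped changing meaningfully.
        if (abs($prevLoss - $losses[$i]) < $minChange) {
            return ['best' => $bestEpoch, 'stopped' => $epoch];
        }

        $prevLoss = $losses[$i];
    }

    return ['best' => $bestEpoch, 'stopped' => count($scores)];
}

// Illustrative, made-up per-epoch values.
$scores = [0.60, 0.72, 0.75, 0.74, 0.73, 0.74];
$losses = [0.90, 0.60, 0.45, 0.40, 0.37, 0.35];

print_r(earlyStop($scores, $losses, 3, 1e-3));
```

With these values the score stops improving after epoch 3, so training halts at epoch 6 and the epoch 3 parameters are the ones that would be restored.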

Note

If there are not enough training samples to build an internal validation set with the user-specified holdout ratio, progress monitoring will be disabled.
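As an illustration of how the condition in the note can arise: with a floor-rounded split, a holdout ratio of 0.1 on fewer than 10 samples leaves an empty validation set. The sketch below assumes floor rounding and an unshuffled split, both simplifications that may not match Rubix ML's actual splitting logic; `holdOutSplit()` is a hypothetical helper.

```php
<?php

/**
 * Hypothetical sketch: split off roughly $holdOut * n samples for
 * validation and report whether progress monitoring could run.
 * Floor rounding is an assumption; a real implementation would
 * typically shuffle or stratify the samples first.
 */
function holdOutSplit(array $samples, float $holdOut): array
{
    $n = count($samples);
    $numValidation = (int) floor($holdOut * $n);

    $validation = array_slice($samples, 0, $numValidation);
    $training = array_slice($samples, $numValidation);

    return [
        'training' => $training,
        'validation' => $validation,
        // An empty validation set gives nothing to score, so monitoring
        // has to be switched off (this also covers holdOut = 0).
        'monitoring' => $numValidation > 0,
    ];
}

$split = holdOutSplit(range(1, 25), 0.1);  // 2 validation, 23 training samples
$tiny = holdOutSplit(range(1, 9), 0.1);    // 0 validation samples
```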

Interfaces: Estimator, Learner, Online, Probabilistic, Verbose, Persistable

Data Type Compatibility: Continuous

Parameters

| # | Name | Default | Type | Description |
|---|---|---|---|---|
| 1 | hidden | | array | An array of the user-specified hidden layers of the network, in order. |
| 2 | batchSize | 128 | int | The number of training samples to process at a time. |
| 3 | optimizer | Adam | Optimizer | The gradient descent optimizer used to update the network parameters. |
| 4 | l2Penalty | 1e-4 | float | The amount of L2 regularization applied to the weights of the output layer. |
| 5 | epochs | 1000 | int | The maximum number of training epochs, i.e. the number of times to iterate over the entire training set before terminating. |
| 6 | minChange | 1e-4 | float | The minimum change in the training loss necessary to continue training. |
| 7 | window | 5 | int | The number of epochs without improvement in the validation score to wait before considering an early stop. |
| 8 | holdOut | 0.1 | float | The proportion of training samples to use for internal validation. Set to 0 to disable. |
| 9 | costFn | CrossEntropy | ClassificationLoss | The function that computes the loss associated with an erroneous activation during training. |
| 10 | metric | FBeta | Metric | The validation metric used to score the generalization performance of the model during training. |
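As a rough illustration of how the `costFn` and `l2Penalty` parameters combine, the sketch below computes the mean categorical cross-entropy of a batch of predicted class probabilities and adds the common L2 term (λ/2)·Σw² over a weight matrix. This is the textbook formulation written in plain PHP with hypothetical function names; Rubix ML applies the penalty inside its layers, and its exact scaling may differ.

```php
<?php

/**
 * Mean categorical cross-entropy: -(1/m) * sum_i log(p_i[y_i]).
 * $probabilities[$i] is a distribution over classes for sample $i,
 * $labels[$i] is the index of the true class of sample $i.
 */
function crossEntropy(array $probabilities, array $labels, float $epsilon = 1e-8): float
{
    $total = 0.0;

    foreach ($probabilities as $i => $dist) {
        // Clip to avoid log(0) for confidently wrong predictions.
        $total -= log(max($dist[$labels[$i]], $epsilon));
    }

    return $total / count($probabilities);
}

/**
 * L2 penalty: (lambda / 2) * sum of squared weights.
 */
function l2Penalty(array $weights, float $lambda): float
{
    $sum = 0.0;

    foreach ($weights as $row) {
        foreach ($row as $w) {
            $sum += $w ** 2;
        }
    }

    return 0.5 * $lambda * $sum;
}

$probabilities = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]];
$labels = [0, 1];
$weights = [[0.5, -1.0], [2.0, 0.0]];

// Regularized loss = data term + penalty term.
$loss = crossEntropy($probabilities, $labels) + l2Penalty($weights, 1e-4);
```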

Example

```php
use Rubix\ML\Classifiers\MultilayerPerceptron;
use Rubix\ML\NeuralNet\Layers\Dense;
use Rubix\ML\NeuralNet\Layers\Dropout;
use Rubix\ML\NeuralNet\Layers\Activation;
use Rubix\ML\NeuralNet\Layers\PReLU;
use Rubix\ML\NeuralNet\ActivationFunctions\LeakyReLU;
use Rubix\ML\NeuralNet\Optimizers\Adam;
use Rubix\ML\NeuralNet\CostFunctions\CrossEntropy;
use Rubix\ML\CrossValidation\Metrics\MCC;

$estimator = new MultilayerPerceptron(
    [
        // Hidden layers, applied in order
        new Dense(200),
        new Activation(new LeakyReLU()),
        new Dropout(0.3),
        new Dense(100),
        new Activation(new LeakyReLU()),
        new Dropout(0.3),
        new Dense(50),
        new PReLU(),
    ],
    128,                // batchSize
    new Adam(0.001),    // optimizer
    1e-4,               // l2Penalty
    1000,               // epochs
    1e-3,               // minChange
    3,                  // window
    0.1,                // holdOut
    new CrossEntropy(), // costFn
    new MCC()           // metric
);
```
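The `Dropout(0.3)` layers in the example randomly zero out activations during training. The sketch below shows standard inverted dropout in plain PHP: each value is dropped with probability `$ratio` and survivors are scaled by 1 / (1 - ratio), so the expected activation is unchanged and no rescaling is needed at inference time. The `dropout()` function is hypothetical and works on a flat array rather than Rubix ML's matrix types.

```php
<?php

/**
 * Inverted dropout on a vector of activations (training time only).
 * Assumes 0 <= $ratio < 1.
 */
function dropout(array $activations, float $ratio): array
{
    $scale = 1.0 / (1.0 - $ratio);
    $out = [];

    foreach ($activations as $a) {
        // mt_rand() / mt_getrandmax() is uniform on [0, 1].
        $keep = (mt_rand() / mt_getrandmax()) >= $ratio;
        $out[] = $keep ? $a * $scale : 0.0;
    }

    return $out;
}

mt_srand(42);  // fixed seed so the mask is reproducible

$activations = [0.5, 1.2, -0.3, 2.0, 0.8];
$dropped = dropout($activations, 0.3);
```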

Additional Methods

Return an iterable progress table with the steps from the last training session:

```php
public steps() : iterable
```

```php
use Rubix\ML\Extractors\CSV;

$extractor = new CSV('progress.csv', true);

$extractor->export($estimator->steps());
```
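To illustrate what an iterable progress table looks like, the sketch below builds one with a generator from per-epoch losses and scores and writes it as CSV using PHP's built-in `fputcsv()`. The column names (`epoch`, `score`, `loss`) and the helper `progressTable()` are assumptions for illustration only; check the keys actually yielded by `steps()` in your installed version.

```php
<?php

/**
 * Hypothetical progress table: one associative row per epoch.
 */
function progressTable(array $losses, array $scores): Generator
{
    foreach ($losses as $i => $loss) {
        yield [
            'epoch' => $i + 1,
            'score' => $scores[$i] ?? null,
            'loss' => $loss,
        ];
    }
}

$rows = progressTable([0.90, 0.60, 0.45], [0.60, 0.72, 0.75]);

// Write a header row followed by one line per epoch to an in-memory stream.
$handle = fopen('php://memory', 'w+');
$header = false;

foreach ($rows as $row) {
    if (!$header) {
        fputcsv($handle, array_keys($row), ',', '"', '');
        $header = true;
    }

    fputcsv($handle, $row, ',', '"', '');
}

rewind($handle);
$csv = stream_get_contents($handle);
fclose($handle);

echo $csv;
```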

Return the loss for each epoch from the last training session:

```php
public losses() : float[]|null
```
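A common convention, and an assumption here rather than documented behavior, is that each epoch's loss is the average of the losses of its mini-batches, with `batchSize` samples per batch. The sketch below splits a training set with `array_chunk()` and averages a per-batch loss; `epochLoss()` and the `$batchLoss` callback (standing in for a forward and backward pass) are hypothetical.

```php
<?php

/**
 * Average loss over mini-batches of size $batchSize.
 * $batchLoss is a hypothetical callback returning the loss of one batch.
 */
function epochLoss(array $samples, int $batchSize, callable $batchLoss): float
{
    // ceil(n / batchSize) batches; the last one may be smaller.
    $batches = array_chunk($samples, $batchSize);

    $total = 0.0;

    foreach ($batches as $batch) {
        $total += $batchLoss($batch);
    }

    return $total / count($batches);
}

// Toy loss: the mean of the batch's values, just to make the example concrete.
$meanLoss = function (array $batch): float {
    return array_sum($batch) / count($batch);
};

$loss = epochLoss(range(1, 10), 4, $meanLoss);  // batches of 4, 4 and 2 samples
```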

Return the validation score for each epoch from the last training session:

```php
public scores() : float[]|null
```

Return the underlying neural network instance, or null if the model is untrained:

```php
public network() : Network|null
```

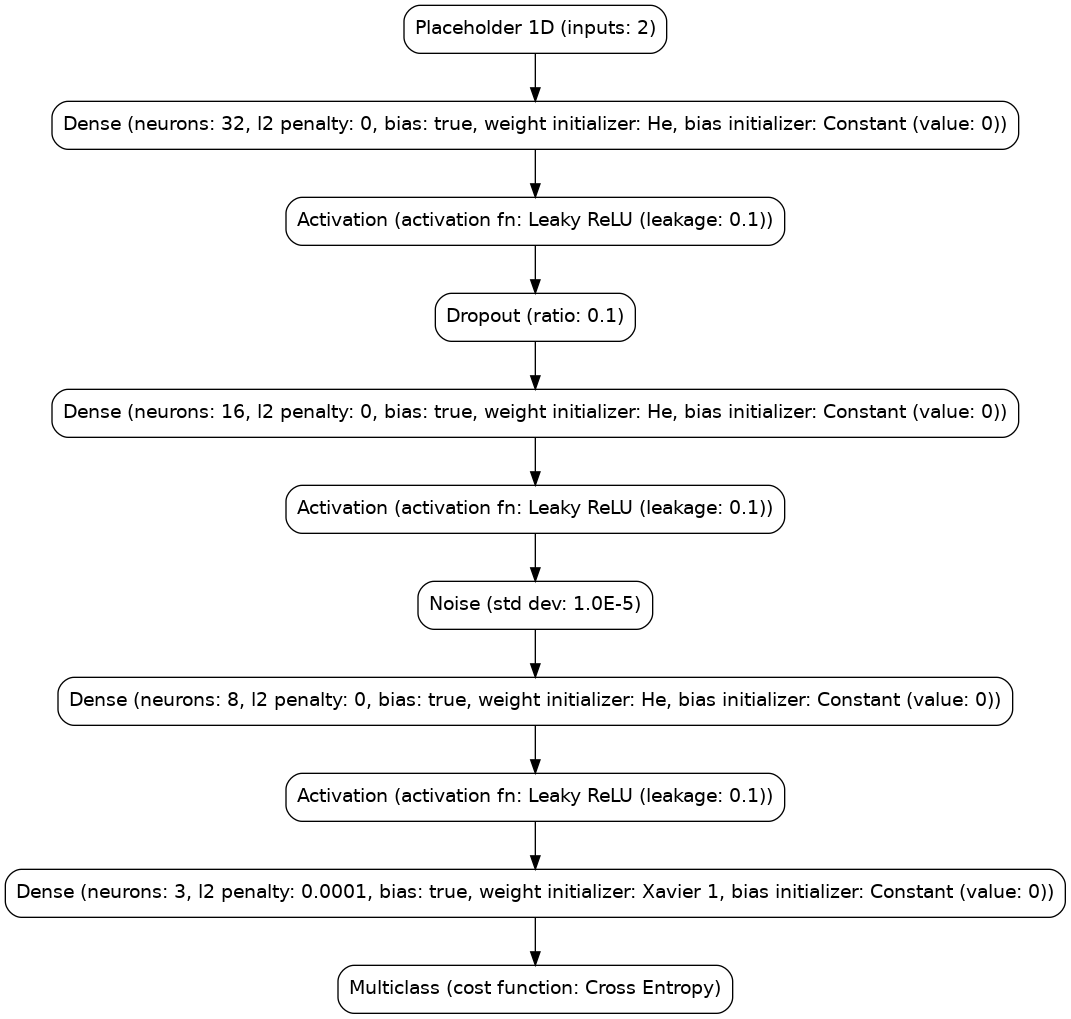

Export a Graphviz "dot" encoding of the neural network architecture.

public exportGraphviz() : Encoding

use Rubix\ML\Helpers\Graphviz;

use Rubix\ML\Persisters\Filesystem;

$dot = $estimator->exportGraphviz();

Graphviz::dotToImage($dot)->saveTo(new Filesystem('network.png'));
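Graphviz "dot" is a plain-text graph description language. The sketch below produces a minimal dot encoding for a sequential chain of layers, roughly the shape of graph such an export describes. The `layersToDot()` function and the node labels are hypothetical and do not reproduce the exact output of `exportGraphviz()`.

```php
<?php

/**
 * Build a minimal Graphviz digraph for a sequential stack of layers.
 */
function layersToDot(array $layers): string
{
    $lines = ['digraph Network {', '  node [shape=box];'];

    foreach ($layers as $i => $name) {
        // One node per layer, labelled with its (escaped) description.
        $lines[] = sprintf('  N%d [label="%s"];', $i, addcslashes($name, '"\\'));
    }

    for ($i = 1; $i < count($layers); $i++) {
        // Edges follow the forward pass from input to output.
        $lines[] = sprintf('  N%d -> N%d;', $i - 1, $i);
    }

    $lines[] = '}';

    return implode("\n", $lines) . "\n";
}

$dot = layersToDot(['Input', 'Dense(200)', 'Activation(LeakyReLU)', 'Dropout(0.3)', 'Output']);

// Saved to network.dot, this could be rendered with: dot -Tpng network.dot -o network.png
echo $dot;
```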